With climate change leading to rising sea levels and intensified storms, global coastlines are facing unprecedented erosion risks. However, accurately predicting coastline change is challenging, especially for long-term trends. Recently, the ShoreShop2.0 international collaborative study evaluated the performance of 34 coastline prediction models through blind testing, revealing the current state of the art in coastline modeling.

The coastline is the dynamic boundary where land meets sea, constantly changing due to waves, tides, storms, and sea level rise. Approximately 24% of sandy coastlines worldwide are retreating at a rate exceeding 0.5 meters per year, and in some areas, such as the US Gulf Coast, the annual erosion rate is even greater than 20 meters.

Predicting coastline change is inherently difficult and complex, requiring consideration of the interplay of multiple factors, including wave energy, sediment transport, and sea level rise. Accurate predictions over long periods of time are even more challenging.

Modern coastline prediction models fall into three categories: physics-based models, such as Delft3D and MIKE21, which are built on fluid mechanics and sediment transport equations; hybrid models, such as CoSMoS-COAST and LX-Shore, which combine physical principles with data-driven methods; and data-driven models, such as LSTM networks and Transformer architectures, which rely entirely on statistical or machine learning techniques.

Despite the wide variety of models, a lack of unified evaluation criteria has made performance comparisons difficult. Which model offers the most accurate predictions? The ShoreShop2.0 blind test competition provides a perfect opportunity for cross-disciplinary comparisons.

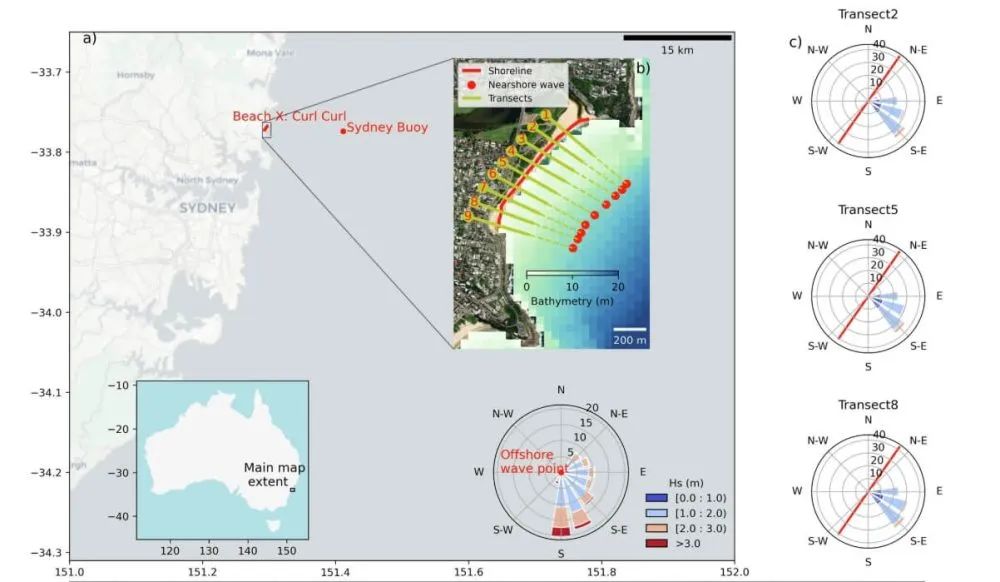

The ShoreShop2.0 international blind test competition is a highly rigorous form of scientific collaboration. Participating teams are told only a code name for the test site, which is a bay or beach. Key information such as its location and actual name is concealed to prevent prior knowledge from influencing model calibration. In addition, part of the record is withheld: data from 2019-2023 (short-term) and 1951-1998 (medium-term) are intentionally kept confidential. The models predict shoreline change over these short- and medium-term periods, and their accuracy is then verified against the withheld data. This design enables cross-disciplinary comparisons of coastal models under completely unknown conditions.

Thirty-four research teams from 15 countries submitted models, comprising 12 data-driven models and 22 hybrid models. These teams came from institutions in the United States, Australia, Japan, France, and other countries. However, the submissions included neither commercial models such as GENESIS nor physics-based models such as Delft3D and MIKE21.

A comparison revealed that the top-performing models for short-term, five-year forecasts were CoSMoS-COAST-CONV_SV (hybrid model), GAT-LSTM_YM (data-driven model), and iTransformer-KC (data-driven model). These models achieved root mean square errors of approximately 10 meters, comparable to the inherent error of 8.9 meters in satellite remote sensing coastline data. This indicates that for some beaches, the models’ predictive capabilities are approaching the limits of observational technology. Of course, other models were able to better capture coastline changes.
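To make the error metric concrete, the following minimal sketch computes a root mean square error between predicted and observed shoreline positions and compares it with the 8.9-meter satellite error quoted above. The position values and the names `rmse` and `SATELLITE_ERROR_M` are illustrative assumptions, not data or code from the study.

```python
import math

def rmse(predicted, observed):
    """Root mean square error between two equal-length sequences (meters)."""
    if len(predicted) != len(observed):
        raise ValueError("sequences must have the same length")
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical shoreline positions (m from a fixed baseline), not study data.
observed = [50.0, 42.0, 47.0, 55.0, 60.0]
predicted = [56.0, 50.0, 39.0, 49.0, 70.0]

error = rmse(predicted, observed)
SATELLITE_ERROR_M = 8.9  # observational error quoted in the article

print(f"RMSE = {error:.1f} m")
print(f"Ratio to satellite error = {error / SATELLITE_ERROR_M:.2f}")
```

A ratio close to 1 means the model error is of the same order as the uncertainty in the observations themselves, so further apparent gains are hard to distinguish from measurement noise.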

A surprising finding was that the best hybrid and data-driven models performed comparably. CoSMoS-COAST-CONV_SV (hybrid model) combines physical processes with convolutional operations, while GAT-LSTM_YM (data-driven model) uses a graph attention network to capture spatial correlations. Both models performed well.

For medium-term forecasts, the LX-Shore series (hybrid models) gave the predictions closest to the measured data. By coupling longshore and cross-shore sediment transport processes, these models maintain long-term stability, and their responses to extreme storm events agree most closely with measurements. Predictions from these models indicate that a single severe storm can cause a transient shoreline retreat of up to 15-20 meters, with full recovery potentially taking two to three years. The CoSMoS-COAST series offers excellent stability, while other models may suffer from long-term drift and over-response.

Model results indicate that data quality is a key limiting factor in model performance. While satellite remote sensing data covers a wide area, its temporal resolution is low, typically weekly to monthly, making it difficult to capture rapid post-storm recovery. Furthermore, the instantaneous water edge is affected by wave runup and tides, leading to transient errors that can affect model predictions.

The study found that spatiotemporal data smoothing, such as robust two-dimensional filtering, can significantly improve model performance. Models submitted later, outside the blind test, reduced average error by 15% through optimized data preprocessing.

Robust 2D Smoothing is an advanced signal processing method specifically designed to process noise in coastline satellite data. Essentially, it is an iterative filtering algorithm based on weighted least squares, and is highly robust to outliers such as transient wave noise in satellite images.
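The idea of iterative reweighting can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the algorithm used in the study: the smoothing step here is a plain weighted box mean over a time-by-transect grid, swapped in for the weighted least squares fit, and the Tukey bisquare weights, the constants 1.4826 and 4.685, and all function names are choices made for this example.

```python
import random
from statistics import median

def weighted_box_mean(grid, weights, radius=1):
    """Weighted mean of each cell's (2r+1) x (2r+1) neighbourhood."""
    rows, cols = len(grid), len(grid[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            num = den = 0.0
            for a in range(max(0, i - radius), min(rows, i + radius + 1)):
                for b in range(max(0, j - radius), min(cols, j + radius + 1)):
                    num += weights[a][b] * grid[a][b]
                    den += weights[a][b]
            # Fall back to the raw value if every neighbour was rejected.
            out[i][j] = num / den if den > 0 else grid[i][j]
    return out

def robust_smooth_2d(grid, radius=1, iterations=5):
    """Iteratively reweighted smoothing using Tukey bisquare weights."""
    rows, cols = len(grid), len(grid[0])
    weights = [[1.0] * cols for _ in range(rows)]
    for _ in range(iterations):
        smooth = weighted_box_mean(grid, weights, radius)
        resid = [grid[i][j] - smooth[i][j]
                 for i in range(rows) for j in range(cols)]
        centre = median(resid)
        # Robust scale estimate from the median absolute deviation.
        scale = 1.4826 * median(abs(r - centre) for r in resid) + 1e-12
        for i in range(rows):
            for j in range(cols):
                u = (grid[i][j] - smooth[i][j]) / (4.685 * scale)
                # Large residuals get zero weight in the next pass.
                weights[i][j] = (1 - u * u) ** 2 if abs(u) < 1 else 0.0
    return weighted_box_mean(grid, weights, radius)

# Synthetic example: rows are survey dates, columns are transects.
rng = random.Random(0)
truth = [[50.0 + 0.5 * t for _ in range(8)] for t in range(12)]
noisy = [[v + rng.gauss(0.0, 1.0) for v in row] for row in truth]
noisy[6][4] += 20.0  # one transient outlier, e.g. wave runup in an image

smoothed = robust_smooth_2d(noisy)
raw_err = abs(noisy[6][4] - truth[6][4])
smooth_err = abs(smoothed[6][4] - truth[6][4])
print(f"error at outlier: raw {raw_err:.1f} m, smoothed {smooth_err:.1f} m")
```

The key design point is that weights are recomputed from residuals on each pass, so a point that disagrees strongly with its neighbours in both space and time gradually loses influence instead of dragging the smoothed surface toward it.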

Another factor crucial for model predictions is the accuracy of nearshore wave data. Currently, wave data suffers from various errors, including errors in nearshore conversion of global wave reanalysis data, biases caused by extracting wave parameters at the 10-meter isobath rather than the breaking zone, and the underestimation of the impact of extreme events by using daily average wave conditions. These errors can all affect model predictions.
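The effect of daily averaging can be illustrated with a small arithmetic sketch. The hourly wave heights below are invented for the example, and the comparison relies only on the standard result that wave energy scales with the square of wave height; the variable names are assumptions.

```python
# Hypothetical hourly significant wave heights (m) for one day
# containing a six-hour storm peak. Not measured data.
hourly_hs = [1.0] * 9 + [2.0, 3.0, 4.0, 4.0, 3.0, 2.0] + [1.0] * 9

daily_mean_hs = sum(hourly_hs) / len(hourly_hs)
peak_hs = max(hourly_hs)

# Wave energy is proportional to H^2, so use H^2 as an energy proxy.
mean_energy_proxy = sum(h ** 2 for h in hourly_hs) / len(hourly_hs)
energy_from_daily_mean = daily_mean_hs ** 2

print(f"daily mean Hs = {daily_mean_hs:.2f} m, peak Hs = {peak_hs:.2f} m")
print(f"true mean H^2 = {mean_energy_proxy:.2f}, "
      f"H^2 of daily mean = {energy_from_daily_mean:.2f}")
```

In this made-up day the daily mean height is 1.5 m while the storm peaks at 4 m, and the energy implied by the daily mean is roughly 70% of the true daily average energy, so a model forced with daily means sees a much weaker storm than actually occurred.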

For long-term predictions, most models rely on the classic Bruun rule to estimate the impact of sea level rise. However, this rule assumes an infinite and balanced sediment supply and ignores the effects of offshore sediment transport and of human activities such as beach nourishment. This can lead to significant model biases.

Based on equilibrium profile theory, the Bruun rule gives a linear relationship between sea level rise and shoreline retreat. The theory posits that a coastal profile maintains an equilibrium shape. As sea level rises, the increasing accommodation space forces this equilibrium profile to shift landward to maintain its shape relative to the new sea level. As the profile shifts landward, the upper beach is eroded and the eroded material is deposited offshore, raising the nearshore seafloor and thereby maintaining a constant water depth. The Bruun rule predicts that coastal retreat can be 10 to 50 times greater than sea level rise, depending on the slope of the beach.
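The linear relationship described above is commonly written as the Bruun rule, R = S · L / (B + h), where S is sea level rise, L is the cross-shore width of the active profile, h is the closure depth, and B is the berm height. The sketch below evaluates it for two hypothetical profiles; the function name and all input values are assumptions chosen to reproduce the 10 to 50 times range, not parameters from the study.

```python
def bruun_retreat(sea_level_rise_m, profile_width_m, closure_depth_m, berm_height_m):
    """Shoreline retreat (m) from the Bruun rule: R = S * L / (B + h)."""
    active_height = closure_depth_m + berm_height_m
    if active_height <= 0:
        raise ValueError("closure depth plus berm height must be positive")
    return sea_level_rise_m * profile_width_m / active_height

# Hypothetical profiles with the same 10 m active height (8 m closure
# depth + 2 m berm) but different widths, i.e. different mean slopes.
rise = 0.5  # metres of sea level rise
steep = bruun_retreat(rise, profile_width_m=100.0, closure_depth_m=8.0, berm_height_m=2.0)
gentle = bruun_retreat(rise, profile_width_m=500.0, closure_depth_m=8.0, berm_height_m=2.0)

print(f"steep profile (slope 1:10): retreat {steep:.1f} m, {steep / rise:.0f}x the rise")
print(f"gentle profile (slope 1:50): retreat {gentle:.1f} m, {gentle / rise:.0f}x the rise")
```

Because the ratio L / (B + h) is the inverse of the mean profile slope, gentler beaches give larger retreat for the same rise. The formula contains no sediment source or sink terms, which is why it cannot represent nourishment or sediment losses.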

This study provides a basis for selecting appropriate tools based on specific needs. It also shows that data preprocessing is crucial: proper data processing can sometimes have a greater impact than the choice of model. Building on the experience gained with ShoreShop2.0, satellite and wave data can be improved to enhance prediction accuracy. In long-term forecasts, the uncontrollable effects of artificially disturbed beaches can also significantly affect results. Finally, the absence of commercial models such as GENESIS and physics-based models such as Delft3D and MIKE21 remains a significant gap in the comparison.

Post time: Aug-11-2025